Arm MAP Profiler at TACC

Last update: May 08, 2020

Arm MAP is a profiler for parallel, multithreaded or single-threaded C, C++, Fortran and F90 codes. MAP provides in-depth runtime analysis and pinpoints bottlenecks down to the source code line. MAP is available on all TACC compute resources. Use the MAP Profiler together with the DDT Debugger to develop and analyze your HPC applications.

Set up Profiling Environment

Before running MAP, compile the application code with the -g option, as shown below:

login1$ mpif90 -g mycode.f90

or

login1$ mpiCC -g mycode.c

Leave in any optimization flags so that the profiled build is still optimized. If you were not using any optimization flags, add the -O2 flag explicitly; otherwise, the -g flag by itself will drop the default optimization from -O2 to -O0.
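The flag rule above can be sketched as a small shell helper. This is a minimal illustration, not part of MAP or the TACC toolchain: the function name profile_flags is hypothetical, and it assumes that optimization flags always start with -O.

```shell
#!/bin/sh
# profile_flags (hypothetical helper): print compiler flags suitable for MAP.
# It always adds -g, keeps every flag it was given, and appends -O2 only when
# no -O* flag was passed, so that -g alone does not leave the build at -O0.
profile_flags() {
    flags="-g"
    have_opt=0
    for flag in "$@"; do
        case "$flag" in
            -O*) have_opt=1 ;;   # caller already chose an optimization level
        esac
        flags="$flags $flag"
    done
    if [ "$have_opt" -eq 0 ]; then
        flags="$flags -O2"
    fi
    printf '%s\n' "$flags"
}

# Show the compile commands that would result (nothing is compiled here).
echo "mpif90 $(profile_flags) mycode.f90"
echo "mpif90 $(profile_flags -O3) mycode.f90"
```

With no arguments the helper yields the -g -O2 combination recommended above; an existing optimization flag such as -O3 is passed through unchanged.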

Follow these steps to set up your profiling environment on Frontera, Stampede3, Lonestar6 and other TACC compute resources.

- Enable X11 forwarding. To use the MAP GUI, ensure that X11 forwarding is enabled when you ssh to the TACC system. Use the -X option on the ssh command line if X11 forwarding is not enabled in your ssh client by default.

  localhost$ ssh -X username@stampede2.tacc.utexas.edu

- Load the appropriate MAP module on the remote system along with any other modules needed to run the application:

  $ module load map_skx mymodule1 mymodule2    # on Stampede3 load "map_skx"

  $ module load map mymodule1 mymodule2        # on all other resources load "map"

- Start the profiler.

  $ map myprogram

  If this error message appears...

  map: cannot connect to X server

  ...then X11 forwarding was not enabled or the system may not have local X11 support. If logging in with the -X flag doesn't fix the problem, please contact the help desk for assistance.

- Click the "Profile a Program" button in the "arm FORGE" window if the "Run" window did not open.

-

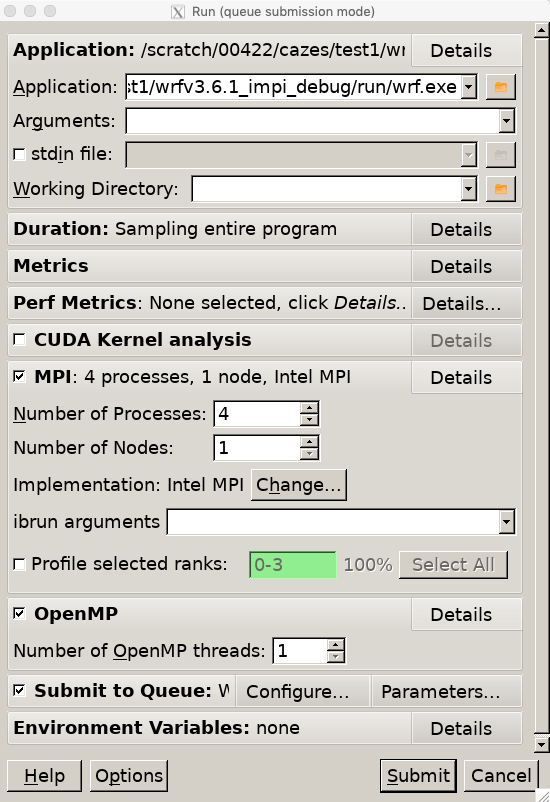

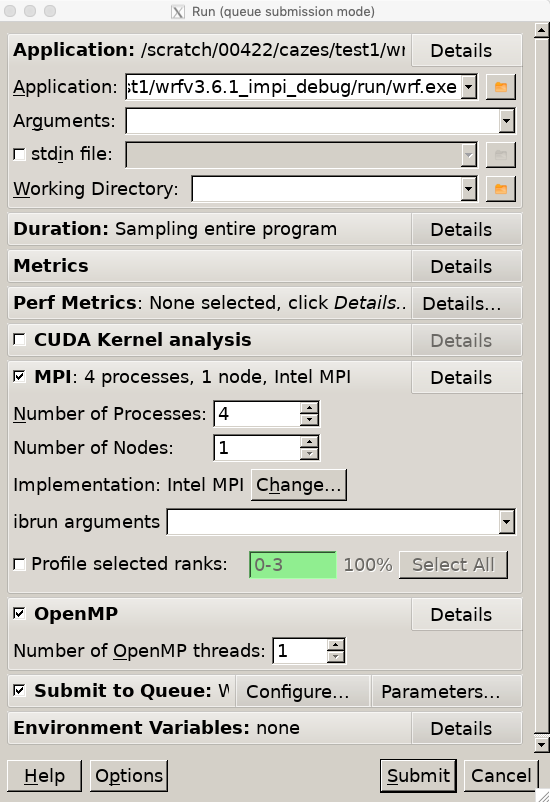

Specify the executable path, command-line arguments, and processor count in the "Run" window. Once set, the values persist from one session to the next.

- Select each of the "Change" buttons in this window, and adjust the job parameters as follows:

-

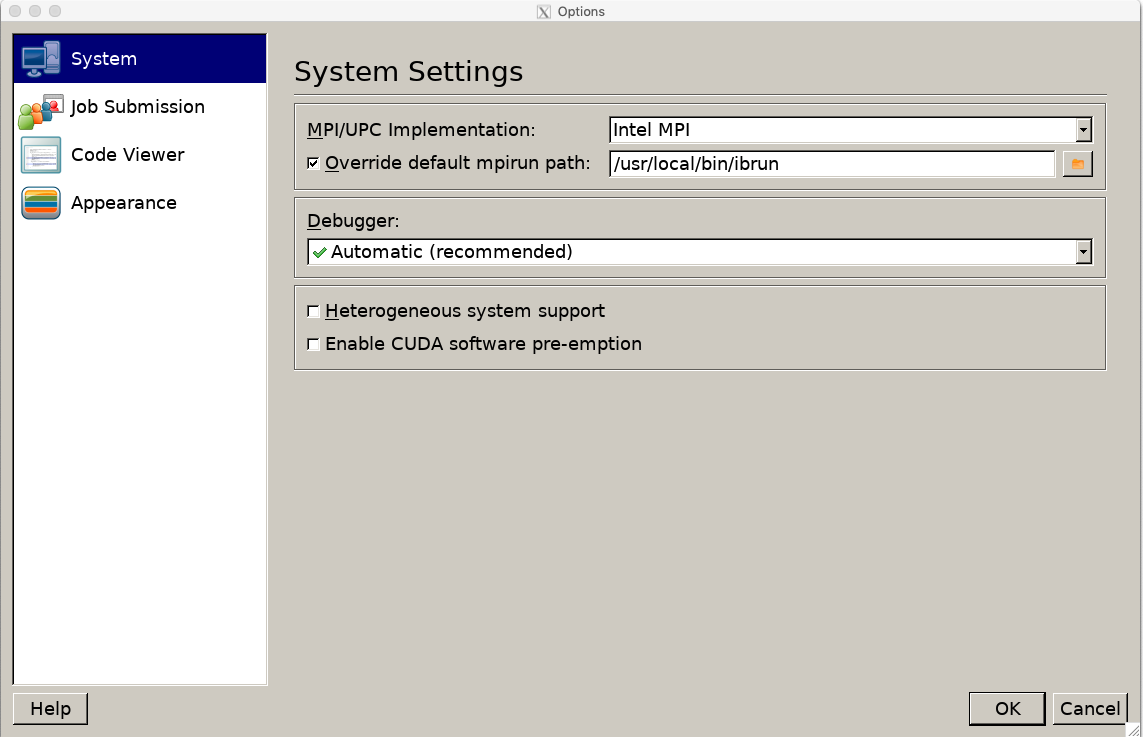

With the "Options" change button, set the MPI Implementation to either "Intel MPI" or "mvapich2", depending on which MPI software stack you used to compile your program. Click OK.

-

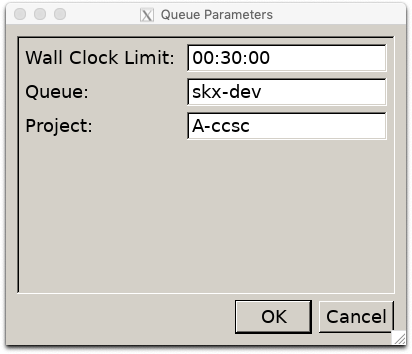

In the "Queue Submission Parameters" window, fill in all the following fields:

Queue default queue is skxfor Stampede3, anddevelopmentfor other systemsTime (hh:mm:ss) Project Allocation/Project to charge the batch job to You must set the Project field to a valid project id. When you login, a list of the projects associated with your account and the corresponding balance should appear. The "Cores per node" setting controls how many MPI tasks will run on each node. For example, if you wanted to run with 4 MPI tasks per node and 4 OpenMP threads per task, this would be set to "4way". Click OK, and you will return to the "Run" window.

- Back in the "Run" window, set the total number of tasks you will need in the "Number of processes" box and the number of nodes you will be requesting. If you are running an OpenMP program, also set the number of OpenMP threads.

-

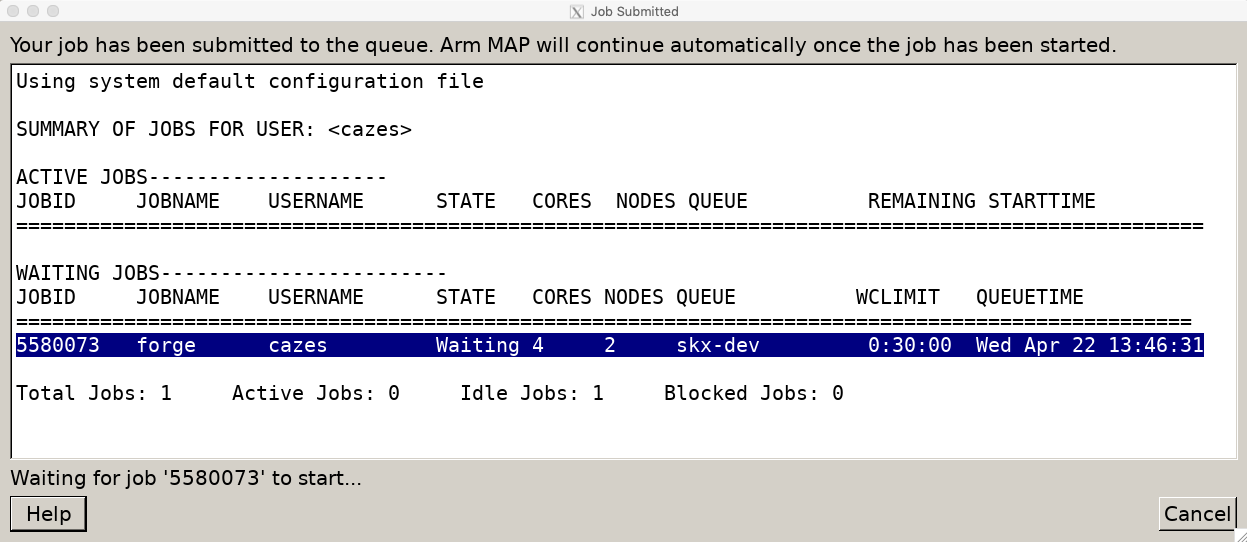

Finally, click "Submit". A submitted status box will appear.
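The per-system choices and the task arithmetic in the steps above can be summarized in a short shell sketch. The function names are hypothetical and not part of MAP; the system name is passed in by hand as a lowercase string (an assumption), and the two-node job size in the example is a placeholder. The module and queue names are the ones given in the steps.

```shell
#!/bin/sh
# Hypothetical helpers that restate the setup steps; none of them call MAP.

# Module to load: "map_skx" on Stampede3, "map" on all other resources.
map_module_for() {
    case "$1" in
        stampede3) echo "map_skx" ;;
        *)         echo "map" ;;
    esac
}

# Default queue: "skx" on Stampede3, "development" on other systems.
default_queue_for() {
    case "$1" in
        stampede3) echo "skx" ;;
        *)         echo "development" ;;
    esac
}

# Value for the "Number of processes" box: nodes * MPI tasks per node.
total_tasks() {
    echo $(( $1 * $2 ))
}

# Example: 2 nodes (placeholder), 4 MPI tasks per node, 4 OpenMP threads each.
system="stampede3"; nodes=2; tasks_per_node=4; omp_threads=4
echo "module load $(map_module_for "$system")"
echo "Queue: $(default_queue_for "$system")"
echo "Cores per node: ${tasks_per_node}way"
echo "Number of processes: $(total_tasks "$nodes" "$tasks_per_node")"
echo "OpenMP threads: $omp_threads"
```

The "Cores per node" value counts MPI tasks only; the OpenMP thread count is entered separately in the "Run" window.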

Running MAP

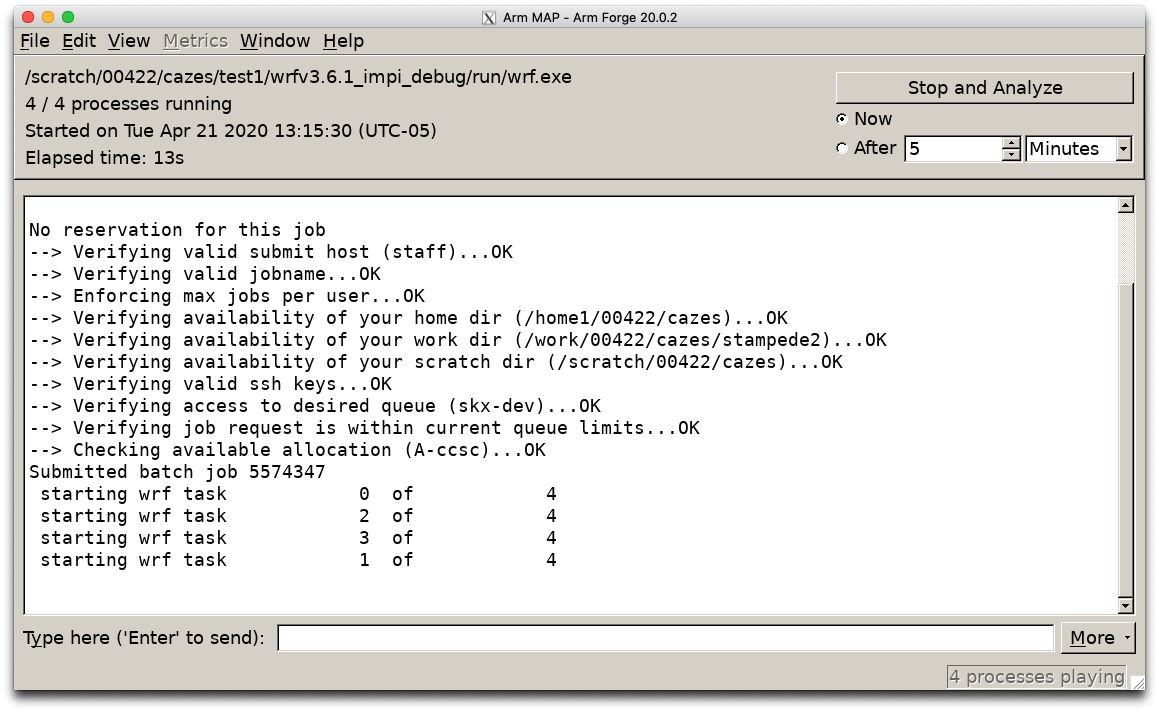

Once the SLURM scheduler launches your job and it starts to run, the MAP window switches to show stdout/stderr. At this point, you can also stop execution of the program to analyze the profiling data collected so far.

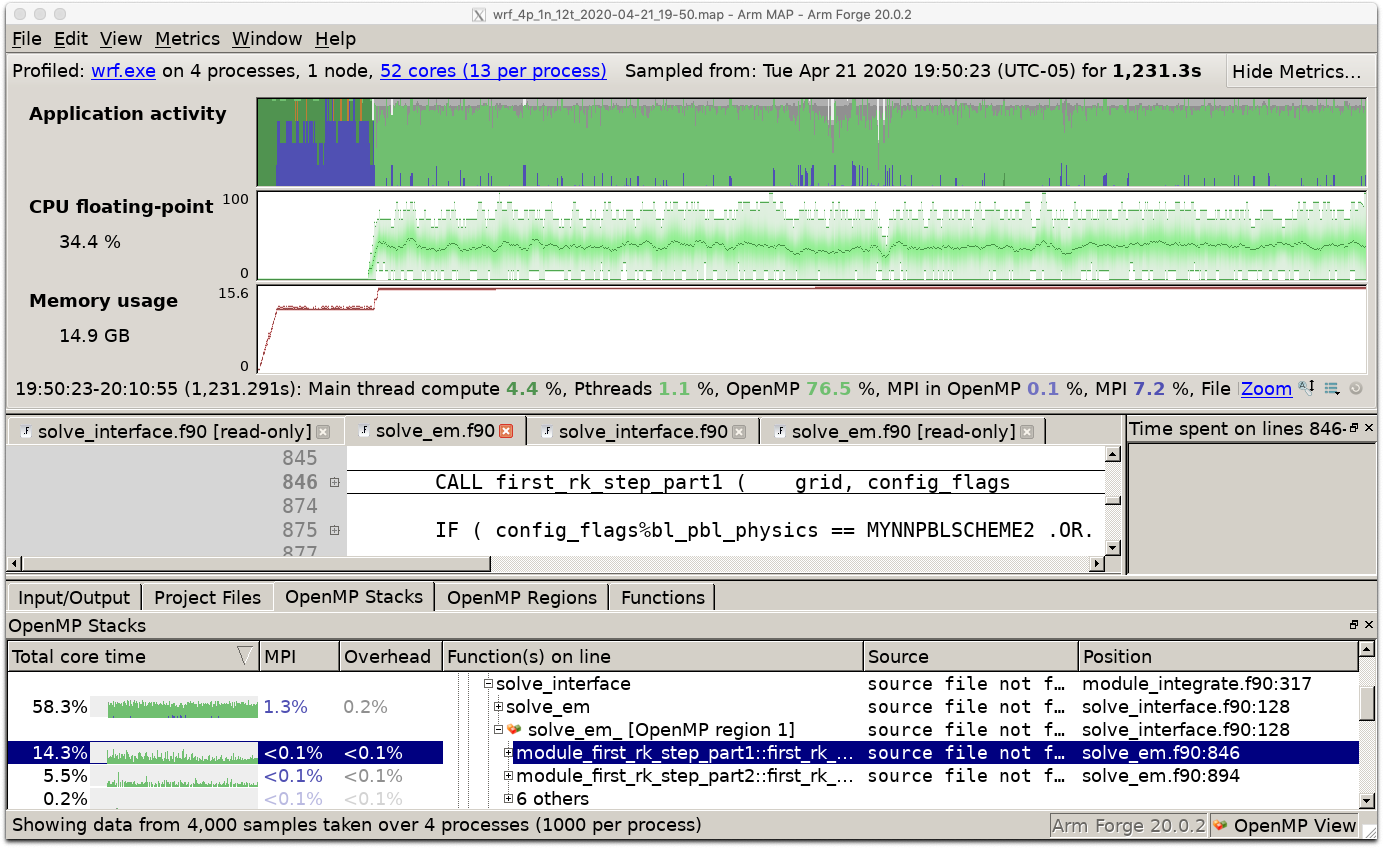

After your code completes, MAP analyzes the collected profiling data and then opens the MAP GUI. This screen shows a timeline of the profiled run, including activity, floating-point and memory usage. Other timelines, such as I/O, MPI, or Lustre, can be added from the "Metrics" menu. If you compiled with -g and MAP can find the source code, a code listing is also available.

MAP with Reverse Connect

Starting MAP from a login node makes it use X11 graphics, which can be slow. Using a VNC connection or the visualization portal is faster, but has its own annoyances. Another way to use MAP is through MAP's Remote Client with "reverse connect". The remote client is a program running entirely on your local machine, and the reverse connection means that the MAP program on the cluster contacts the client, rather than the other way around.

- Download and install the Arm Forge remote client. The client version and the MAP version on the TACC cluster must correspond.

-

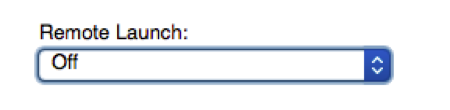

Under "Remote Launch" make a new configuration:

-

Fill in your login name and the cluster to connect to, for instance

stampede2.tacc.utexas.edu. The remote installation directory is stored in the$TACC_MAP_DIRenvironment variable once the module is loaded. -

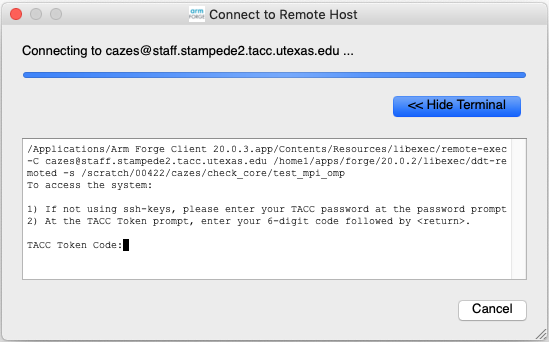

Make the connection; you'll be prompted for your password and two-factor code:

-

From any login node, submit a batch job where the ibrun line is replaced by:

login1$ map --connect -n 123 ./yourprogram -

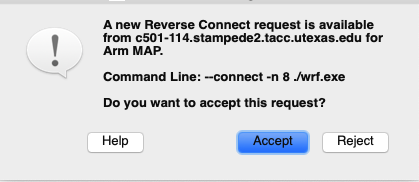

When your batch job (and therefore your MAP execution) starts, the remote client will ask you to accept the connection:

Your MAP session will now use the remote client.
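
For the batch job in the reverse-connect steps, a job script might look like the sketch below. Only the map --connect line comes from the text above. The file name map_reverse.slurm, job name, node count, time limit and the myproject allocation are placeholders, and the #SBATCH lines are ordinary Slurm directives rather than anything MAP-specific. The snippet only writes the script to disk and does not submit it.

```shell
#!/bin/sh
# Write a hypothetical Slurm job script for a MAP reverse-connect run.
# All #SBATCH values below are placeholders; adjust them for your system.
cat > map_reverse.slurm <<'EOF'
#!/bin/bash
#SBATCH -J map_reverse        # job name (placeholder)
#SBATCH -o map_reverse.o%j    # output file; %j expands to the job id
#SBATCH -p development        # queue; use skx on Stampede3
#SBATCH -N 4                  # number of nodes (placeholder)
#SBATCH -n 123                # total MPI tasks; matches "map -n" below
#SBATCH -t 00:30:00           # run time limit (hh:mm:ss)
#SBATCH -A myproject          # allocation to charge (placeholder)

module load map               # load "map_skx" instead on Stampede3

# This line replaces the usual ibrun launch line.
map --connect -n 123 ./yourprogram
EOF

echo "Wrote map_reverse.slurm"
```

With the remote client already connected to the cluster, submit the generated file from a login node using sbatch map_reverse.slurm; the client should then prompt you to accept the connection once the job starts.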